Entrainment

Semblance Typology of Entrainments

Sat, 11/08/2014 - 21:36 — AdrianFreed

synonym - together in meaning

synorogeny - form boundaries

conclude - close together

symptosis - fall together

symptomatic - happening together

symphysis - growing together

sympatria - in the same region

symmetrophilic - lover of collecting in pairs

synaleiphein - melt together

For an evaluation of various mathematical formalizations inspired by this entrainment work, check out this paper: https://www.researchgate.net/profile/Rushil_Anirudh/publication/29991298... The ASU team successfully quantify the advantages of metrics such as the one I suggested (HyperComplex Signal Correlation) for dancing bodies where correlated rotations and displacement are in play.

Thanks to:

Entrainment Accounts of Intra-action for Intermedia and Metabody Cybernetics.

Tue, 08/25/2020 - 17:51 — AdrianFreed

Celebrating Valérie Lamontagne

Wed, 10/09/2019 - 13:47 — AdrianFreed

Last week the world of wearables lost the light of one of our shining stars, Valérie Lamontagne.

I invite you to celebrate her life by reading, citing and responding in your own way to one of her major life’s works, her 2017 dissertation “Performative Wearables: Bodies, Fashion, and Technology”.

You can find it here: https://spectrum.library.concordia.ca/982473/

She covers some examples used in her dissertation here: https://vimeo.com/196041125

Valérie’s dissertation contributes to the field of wearables by carefully and clearly looking at our field with a combination of two powerful lenses: performativity and non-human agency. Performativity is a well-established lens articulated by Austin for speech acts and made popular by Judith Butler’s use of it for gender, Jean-François Lyotard’s for power, and Karen Barad’s for cultural and feminist studies of science. Explorations of non-human agency have taken on a recent urgency from the extinction and climate crises. Both these lenses have a long, interesting, convoluted intellectual history, so I especially appreciate Valérie’s approach of using extensive case studies of applications familiar to us as a “soft” way into learning the conceptual underpinnings.

What might you be able to do with the deeper understanding that comes from reading this work? It can give you a grasp on the thorny problem of cultural uptake: which designs will have lasting impact? Why do we see the same designs (tropes) being recycled in the field, e.g., backpacks that control a cellphone or music player? It can deepen understanding and appreciation of recent exploratory work in the field. For example, Hannah Perner-Wilson has been exploring and celebrating the humble pin. She has moved from pin as a tool, to pin as a material, and in her recent whimsical work to pin as an active agent with its own agendas.

I was using performativity, non-human agency and related lenses in my studies of the history of electronic musical instruments while I was in the same program as Valérie at Concordia (INDI). As I surveyed images of the labs where these musical instruments were created in the last 100 years, I saw one piece of apparatus “photobombing” every picture: the oscilloscope. I no longer see this as a prop or minor tool of the trade. As a non-neutral visualizer of certain sounds it has had huge agency over what these electronic musical instruments did. It delayed the adoption of “noisy” and “chaotic” sounds because they don’t visualize well on the traditional oscilloscope. And so it is with the battery of wearable technology. The battery’s promise to deliver power is underwritten by our promise to recharge it. This agency of entrainment has induced “range anxiety” from the wearables of the nineteenth century to modern electric vehicles.

As Melissa Coleman and Troy Nachtigall just pointed out, Valérie Lamontagne did not have a chance to expand and broadly diffuse the fruits of her Ph.D. labor. Dissertation work can easily get lost in libraries and obscure university web sites because it isn’t indexed as well as conference/journal/book publications. It has only 4 citations in scholar.google.com as I write this.

Even as we struggle with our shock and sorrow, let’s read and share her deep and valuable work.

Pervasive Cameras: Making Sense of Many Angles using Radial Basis Function Interpolation and Salience Analysis

Mon, 01/15/2018 - 15:38 — AdrianFreed

Abstract. This paper describes situations which lend themselves to the use of numerous cameras and techniques for displaying many camera streams simultaneously. The focus here is on the role of the “director” in sorting out the onscreen content in an artistic and understandable manner. We describe techniques which we have employed in a system designed for capturing and displaying many video feeds incorporating automated and high level control of the composition on the screen.

1 A Hypothetical Cooking Show

A typical television cooking show might include a number of cameras capturing the action and techniques on stage from a variety of angles. If the TV chef were making salsa, for example, cameras and crew might be set up by the sink to get a vegetable washing scene, over the cutting board to capture the cutting technique, next to a mixing bowl to show the finished salsa, and at a few wider angles that can focus on the chef's face while he or she explains the process or on the kitchen scene as a whole. The director of such a show would then choose the series of angles that best visually describe the process and technique of making salsa. Now imagine trying to make a live cooking show presentation of the same recipe by oneself. Instead of relying on a team of camera people, the prospective chef could simply set up a bunch of high quality and inexpensive webcams in these key positions in his or her own kitchen to capture the process and banter from many angles. Along with the role of star, the chef must also act as director and compose these many angles into a cohesive and understandable narrative for the live audience.

Here we describe techniques for displaying many simultaneous video streams using automated and high level control of the video frames' positions and sizes on the screen, allowing a solo director to compose many angles quickly, easily, and artistically.

2 Collage of Viewpoints

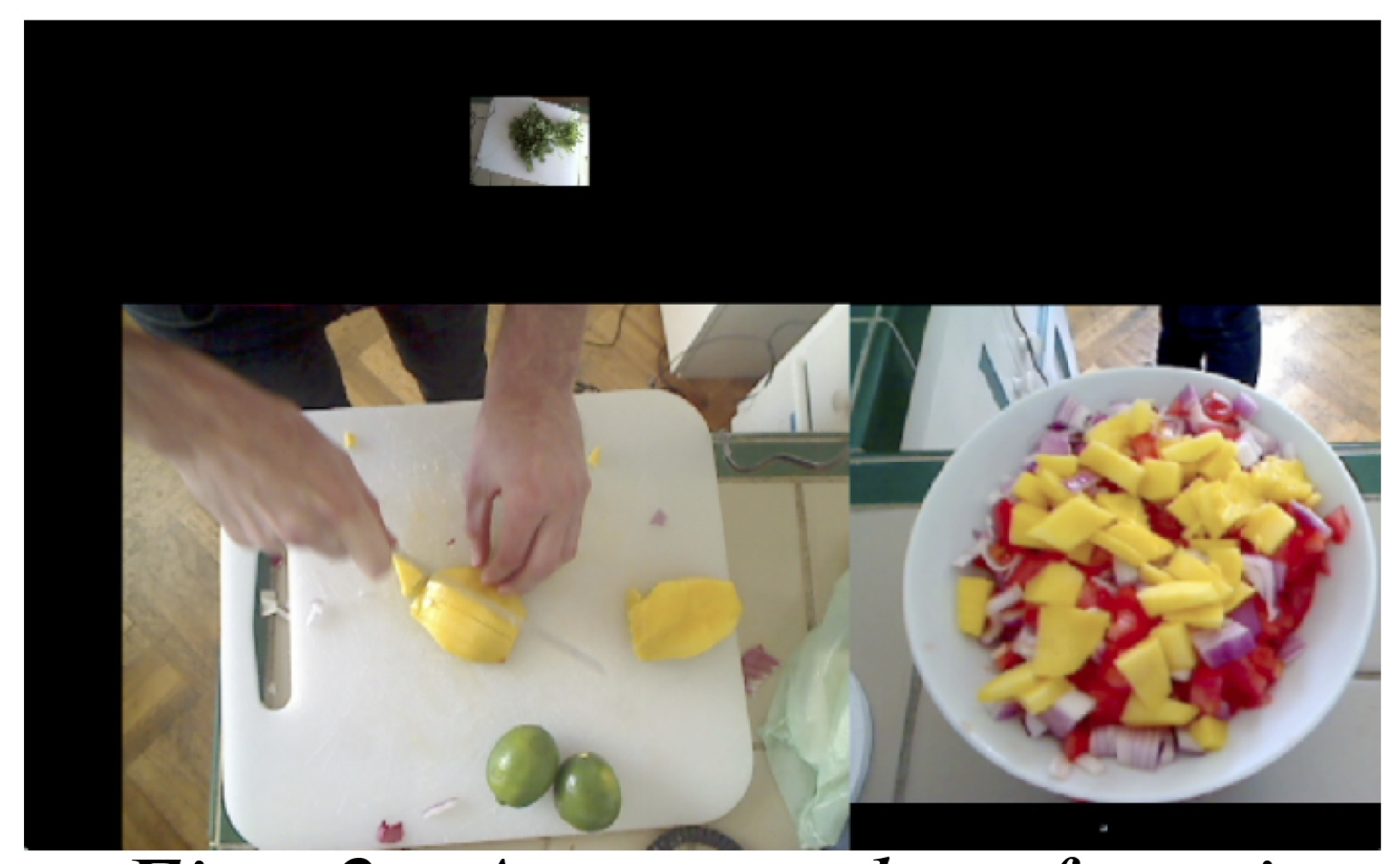

Cutting between multiple viewpoints can obscure one important aspect in favor of another. In the cooking show example, a picture-in-picture approach would allow the display of both a closeup of a tomato being chopped and the face of the chef describing his or her cutting technique. The multi-angle approach is a powerful technique for understanding all of the action being captured in a scene; it is also artistically compelling and greatly simplifies the work of the director of such a scene. The multi-camera setup lends itself to a collage of framed video streams on a single screen (fig. 1 shows an example of this multi-angle approach). We would like to avoid a security camera style display that would leave much of the screen filled with unimportant content, like a view of a sink not being used by anyone.

Figure 1: A shot focusing on the cilantro being washed

We focus attention on a single frame by making it larger than the surrounding frames or moving it closer to the center. The system is designed to take in a number of scaling factors (user-defined and automated) which multiply together to determine the final frame size and position. The user-defined scaling factor is set with a fader on each of the video channels. This is useful for setting initial levels and biasing one frame over another in the overall mix. Setting and adjusting separate channels can be time consuming for the user, so we give the user a higher level of control that facilitates scaling many video streams at once smoothly and quickly. CNMAT's (Center for New Music and Audio Technologies) rbfi (radial basis function interpolation) object for Max/MSP/Jitter allows the user to easily switch between preset arrangements, and also explore the infinite gradients in between these defined presets using interpolation [1]. rbfi lets the user place data points (presets in this case) anywhere in a two-dimensional space and explore that space with a cursor. The weight of each preset is a power function of the distance from the cursor. For example, one preset point might enlarge all of the video frames from cameras at particular positions in the room, say by the kitchen island, so that as the chef walks over to the kitchen island, all of the cameras near the island enlarge and all of the other cameras shrink. Another preset might bias cameras focused on fruits. With rbfi, the user can slide the cursor to whichever preset best fits the current situation. With this simple yet powerful high level of control, a user is able to compose the scene quickly and artistically, even while chopping onions.
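The preset interpolation described above can be sketched in a few lines of Python. This is only an illustration, not the rbfi object's actual implementation or API: the function names, the inverse-power weighting with normalization, and the default exponent of 2 are all assumptions chosen to match the statement that each preset's weight is a power function of its distance from the cursor.

```python
import math


def preset_weights(cursor, points, power=2.0, eps=1e-9):
    """Normalized weights for each preset point (hypothetical helper).

    Assumes weight = distance ** -power, so nearer presets dominate.
    """
    dists = [math.hypot(cursor[0] - x, cursor[1] - y) for x, y in points]
    for i, d in enumerate(dists):
        if d < eps:
            # Cursor sits on a preset: that preset takes all the weight.
            return [1.0 if j == i else 0.0 for j in range(len(points))]
    raw = [d ** -power for d in dists]
    total = sum(raw)
    return [r / total for r in raw]


def interpolate_scales(cursor, presets, power=2.0):
    """Blend per-camera scale factors from all presets.

    presets: list of ((x, y), [scale for each camera]).
    """
    points = [point for point, _ in presets]
    weights = preset_weights(cursor, points, power)
    n_cameras = len(presets[0][1])
    return [
        sum(w * scales[k] for w, (_, scales) in zip(weights, presets))
        for k in range(n_cameras)
    ]


# Two presets: "kitchen island" favors cameras 0 and 1, "fruit" favors camera 2.
presets = [
    ((0.0, 0.0), [2.0, 2.0, 0.5]),
    ((1.0, 0.0), [0.5, 0.5, 2.0]),
]
island_view = interpolate_scales((0.0, 0.0), presets)
halfway_view = interpolate_scales((0.5, 0.0), presets)
```

With the cursor on the first preset, the blend reproduces that preset's scale factors; halfway between the two presets, each contributes equally.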

Figure 2: A screenshot focusing on the mango being chopped and the mixing bowl

3 Automating Control

Another technique we employ is to automate the direction of the scene by analyzing the content of the individual frames and then resizing them to maximize the area of the frames containing the most salient features. This approach makes for fluid and dynamic screen content which focuses on the action in the scene without any person needing to operate the controls. One such analysis measures the amount of motion, which is quantified by taking a running average of the number of changed pixels between successive frames in one video stream. The most dynamic video stream would have the largest motion scaling factor while the others shrink from their relative lack of motion. Another analysis detects faces using the OpenCV library and then promotes video streams with faces in them. The multiplied combination of user-defined and automated weight adjustments determines each frame's final size on the screen. Using these two automations with the cooking show example, if the chef looks up at the camera and starts chopping a tomato, the video streams that contain the chef's face and the tomato being chopped would be promoted to the largest frames in the scene while the other less important frames shrink to accommodate the two.
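A minimal sketch of the motion measure and the multiplicative combination might look as follows. Everything here is an assumption made for illustration: frames are flat lists of grayscale values, the running average is an exponential moving average of the fraction (rather than the raw count) of changed pixels, the class and function names are hypothetical, and the face detector is represented only by a boolean so that no OpenCV call is guessed at.

```python
class MotionMeter:
    """Running average of the fraction of pixels that changed between frames."""

    def __init__(self, threshold=16, smoothing=0.9):
        self.threshold = threshold  # minimum difference that counts as a change
        self.smoothing = smoothing  # closer to 1.0 means a slower-moving average
        self.previous = None
        self.average = 0.0

    def update(self, frame):
        """Feed one frame (flat list of grayscale values); return the average."""
        if self.previous is not None:
            changed = sum(
                1
                for old, new in zip(self.previous, frame)
                if abs(old - new) > self.threshold
            )
            fraction = changed / len(frame)
            self.average = (
                self.smoothing * self.average
                + (1.0 - self.smoothing) * fraction
            )
        self.previous = list(frame)
        return self.average


def frame_scale(user_fader, motion, face_present, motion_gain=4.0, face_boost=1.5):
    """Multiply the user-defined and automated factors into one frame scale."""
    motion_factor = 1.0 + motion_gain * motion
    face_factor = face_boost if face_present else 1.0
    return user_fader * motion_factor * face_factor


meter = MotionMeter(threshold=10, smoothing=0.5)
meter.update([0, 0, 0, 0])            # first frame: nothing to compare against
motion = meter.update([0, 0, 100, 100])  # half of the pixels changed
scale = frame_scale(1.0, motion, face_present=True)
```

Because the factors multiply, pulling a channel's fader to zero hides that frame no matter how much motion or how many faces it contains.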

Figure 3: A screenshot of the finished product and some cleanup

Positioning on the screen is also automated in this system. The frames are able to float anywhere on and off the screen while edge detection ensures that no frames overlap. The user sets the amount of a few different forces that are applied to the positions of the frames on the screen. One force propels all of the frames towards the center of the screen. Another force pushes the frames to rearrange their relative positions on the screen. No single influence dictates the exact positioning or size of any video frame; this is only determined by the complex interaction of all of these scaling factors and forces.
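One way to picture this interplay of forces is the toy layout step below. It is not the system's actual algorithm: the `Frame` class, the `pull` and `push` parameters, and the rule of separating overlapping frames along the axis of least overlap are all hypothetical choices made to keep the sketch short.

```python
from dataclasses import dataclass


@dataclass
class Frame:
    x: float  # center x
    y: float  # center y
    w: float  # width
    h: float  # height


def overlap(a, b):
    """Overlap extents along x and y; both positive means the frames overlap."""
    dx = (a.w + b.w) / 2 - abs(a.x - b.x)
    dy = (a.h + b.h) / 2 - abs(a.y - b.y)
    return dx, dy


def layout_step(frames, center=(0.0, 0.0), pull=0.1, push=1.0):
    """Apply one step of the two forces, moving the frames in place."""
    # Force 1: every frame drifts towards the center of the screen.
    for f in frames:
        f.x += pull * (center[0] - f.x)
        f.y += pull * (center[1] - f.y)
    # Force 2: overlapping pairs are pushed apart along the axis of
    # least overlap, each frame taking half of the correction.
    for i, a in enumerate(frames):
        for b in frames[i + 1:]:
            dx, dy = overlap(a, b)
            if dx <= 0 or dy <= 0:
                continue
            if dx < dy:
                shift = push * dx / 2
                sign = -1.0 if a.x <= b.x else 1.0
                a.x += sign * shift
                b.x -= sign * shift
            else:
                shift = push * dy / 2
                sign = -1.0 if a.y <= b.y else 1.0
                a.y += sign * shift
                b.y -= sign * shift


frames = [Frame(-0.1, 0.0, 1.0, 1.0), Frame(0.1, 0.0, 1.0, 1.0)]
layout_step(frames, pull=0.0, push=1.0)  # separation only
```

Calling `layout_step` once per rendered frame, with sizes updated from the scaling factors beforehand, lets the arrangement settle gradually instead of jumping.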

4 Telematic Example

Aside from a hypothetical self-made cooking show, a tested application of these techniques is in a telematic concert situation. The extensive use of webcams on the stage works well in a colocation concert where the audience might be in a remote location from the performers. Many angles on one scene give the audience more of a tele-immersive experience. Audiences can also experience fine details, like a performer's subtle playing inside the piano or a bassist's intricate fretboard work, even when they are not at the location or are seated far from the stage. The potential issue is sorting out all of these video streams without overwhelming the viewer with content. This can be achieved not with a large crew of videographers at each site, but with a single director dynamically resizing and rearranging the frames based on feel or cues as well as analysis of the video streams' content.

Figure 4: This is a view of a pianist from many angles which would give an audience a good understanding of the room and all of the player's techniques inside the piano and on the keyboard.

References

1. Freed, A., MacCallum, J., Schmeder, A., Wessel, D.: Visualizations and Interaction Strategies for Hybridization Interfaces. New Interfaces for Musical Expression (2010)

Pervasive Cameras: Making Sense of Many Angles using Radial Basis Function Interpolation and Salience Analysis, Pervasive 2011, 12/06/2011, San Francisco, CA, (2011)